
EEG Acquisition During the VR Administration of Resting State, Attention, and Image Recognition Tasks: A Feasibility Study

Greg Rupp¹, Chris Berka¹, Amir Meghdadi¹, Marissa C. McConnell¹, Mike Storm², Thomas Z. Ramsøy², and Ajay Verma³

¹ Advanced Brain Monitoring Inc., Carlsbad, CA, USA (grupp@b-alert.com)

² Neurons Inc., Taastrup, Denmark

³ United Neuroscience, Dublin, Ireland

Abstract. The co-acquisition of EEG in a virtual environment (VE) would give researchers and clinicians the opportunity to acquire EEG data with millisecond-level temporal resolution while participants perform VE activities. This study integrated Advanced Brain Monitoring’s (ABM) X-24t EEG hardware with the HTC Vive VR headset and investigated EEG differences between tasks delivered in two modalities: a VE and a desktop computer.

EEG was acquired from 10 healthy individuals aged 24–75 with a 24-channel wireless EEG system. Acquisition was synchronized with resting-state eyes-open/eyes-closed tasks in both dark and bright environments, a sustained attention task (3-Choice Vigilance Task, 3CVT), and an image memory task. These tasks, along with resting data, were collected both in a VR-administered VE and on a desktop computer. Event-related potentials (ERPs) were investigated for target trials of the SIR and 3CVT tasks. Power spectral analysis was performed for the resting-state tasks.

A within-subject comparison showed no significant differences in the amplitude of the Late Positive Potential (LPP) in 3CVT between tasks administered in the VE and on the desktop. On visual inspection, the grand average waveforms are similar between the two acquisition modalities. EEG alpha power was greatest during resting state eyes closed in a dark VR environment.

This project demonstrated that ABM’s B-Alert X24t hardware and acquisition software could be successfully integrated with the HTC Vive (programmed using Unreal Engine 4), allowing high-quality EEG data to be acquired with time-locked VR stimulus delivery at millisecond-level temporal resolution. As expected, a within-subjects analysis of cognitive ERPs revealed no significant differences in the EEG measures between Desktop and VR AMP acquisitions. This suggests that administering the tasks in a VE does not alter the neural pathways activated in sustained attention and memory tasks.

Keywords: EEG • Event related potential • Virtual Reality (VR) • Attention and memory • Integration

1 Introduction

A growing number of virtual reality (VR) devices are commercially available and deliver an immersive experience to the end user. This technology was developed primarily for gaming and has been scaled to a price point within reach of much of the population. The potential application of these VR systems to the research and clinical domains is considerable. In particular, co-acquisition of EEG and VR is of great importance, as it would give researchers and clinicians the opportunity to acquire EEG data with millisecond-level temporal resolution while the participant is immersed in a virtual environment (VE). Today’s VR technology can deliver a realistic VE, enabling comparisons of EEG metrics and responses evoked by VEs versus similar presentations on a desktop or other 2D display.

The amount of research involving EEG and VR is growing steadily. The efficacy of VEs for enhancing simulated environments has been demonstrated in fields ranging from psycholinguistics to education to driving simulation [1–6]. In one study, VR and EEG were integrated into a Virtual Reality Therapy System that used EEG to measure VR-induced changes in brain states associated with relaxation [6]. These studies indicate that VR, used in combination with EEG, can be a tool for creating and delivering novel and enhanced environments designed for assessment or therapeutic intervention.

Advanced Brain Monitoring (ABM) has developed a collection of neurocognitive tests collectively referred to as the Alertness and Memory Profiler (AMP). AMP is a neurocognitive testbed designed to be administered while simultaneously recording EEG/ECG with a wireless, portable headset that acquires 20 channels of EEG and transmits digitized data via Bluetooth to a laptop. AMP consists of sustained attention, working memory, and recognition memory tests that are automatically delivered and time-locked to the EEG to generate event-related potentials (ERPs). In prior work, ABM demonstrated the potential utility of ERP measures acquired with AMP as sensitive early-stage biomarkers of the cognitive deficits associated with Mild Cognitive Impairment.

In the present study, the potential for integrating ABM’s B-Alert X24t headset with the HTC Vive™ VR headset was assessed in two ways: the physical integration of the hardware and software, and the EEG measures collected during the AMP tasks. The aims were to achieve millisecond-level time synchronization between the EEG and VR headsets, ensure user comfort, and determine whether there were significant EEG differences between tasks administered on the desktop computer and tasks administered in the VR environment.

2 Methods

2.1 Participants

Ten healthy individuals aged 24 to 75 years were recruited and screened to serve as participants. Data were acquired at the study site at 9:00 am. The VR AMP and Desktop AMP acquisitions were performed at least one week apart (in no fixed order) to minimize practice and/or memory effects.

2.2 B-Alert® X24 EEG Headset

EEG was acquired using the B-Alert® X24 wireless sensor headset (Advanced Brain Monitoring, Inc., Carlsbad, CA). This system has 20 EEG channels located according to the International 10–20 system at Fz, Fp1, Fp2, F3, F4, F7, F8, Cz, C3, C4, T3, T4, T5, T6, O1, O2, Pz, POz, P3, and P4, referenced to linked mastoids. Data were sampled at 256 Hz.
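At 256 Hz, consecutive samples are 1000/256 ≈ 3.9 ms apart, so placing a stimulus marker on the nearest EEG sample introduces at most about 2 ms of timing error. The minimal sketch below illustrates this conversion under the assumption that VR event times are already expressed in seconds on the EEG clock; the text does not describe how the two clocks were synchronized, and `event_to_sample` and `quantization_error_ms` are hypothetical helper names.

```python
FS_HZ = 256  # B-Alert X24 sampling rate reported in Sect. 2.2

# Time between consecutive samples: 1000 / 256 = 3.90625 ms
sample_period_ms = 1000.0 / FS_HZ


def event_to_sample(t_event_s: float, t_start_s: float = 0.0, fs: int = FS_HZ) -> int:
    """Map an event time (s) to the nearest EEG sample index.

    Assumption: VR event timestamps and the EEG recording share one clock,
    and t_start_s is the time of EEG sample 0 on that clock.
    """
    return round((t_event_s - t_start_s) * fs)


def quantization_error_ms(t_event_s: float, t_start_s: float = 0.0, fs: int = FS_HZ) -> float:
    """Signed timing error (ms) caused by snapping the event to the nearest sample."""
    idx = event_to_sample(t_event_s, t_start_s, fs)
    return (t_start_s + idx / fs - t_event_s) * 1000.0
```

For example, `event_to_sample(1.0)` returns 256, and the magnitude of `quantization_error_ms` never exceeds half a sample period (about 1.95 ms).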

2.3 VR EEG Acquisitions

The participants were administered AMP tasks that had been adapted to the HTC Vive™ Steam software (Fig. 1). Participants were given a few minutes to acclimate to a virtual room that replicated the physical room in which the acquisition took place.

Fig. 1. The B-Alert/HTC Vive integrated system

Fig. 2. The 3CVT task in the VE. A NonTarget image is being presented.

The protocol for the VR AMP acquisition was as follows:

• Resting State Eyes Open in a VE with normal indoor lighting (RSEO-Bright, 5 min)

• Resting State Eyes Closed in a VE with normal indoor lighting (RSEC-Bright, 5 min)

• 3-Choice Vigilance Task (3CVT test of sustained attention, 20 min)

• 10 min break

• Resting State Eyes Open in a VE without any lighting (RSEO-Dark, 5 min)

• Resting State Eyes Closed in a VE without any lighting (RSEC-Dark, 5 min)

The 3CVT requires participants to discriminate frequent Target stimuli (upright triangle) from infrequent NonTarget stimuli (upside-down triangle) and Interference stimuli (diamond) (Fig. 2). After the 3CVT and the break, the eyes-open and eyes-closed tasks were repeated within a darkened virtual room.
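For labeling recording segments, the VR session can be written down as an ordered schedule of the blocks listed above. This sketch assumes the blocks ran back to back with no gaps (acclimation and setup time are not given), which yields a nominal 50 min session; `Block` and `block_onsets` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    name: str
    minutes: float


# VR AMP protocol order and durations as listed in Sect. 2.3
VR_PROTOCOL = [
    Block("RSEO-Bright", 5),
    Block("RSEC-Bright", 5),
    Block("3CVT", 20),
    Block("Break", 10),
    Block("RSEO-Dark", 5),
    Block("RSEC-Dark", 5),
]


def block_onsets(protocol):
    """Return (name, onset_min, offset_min) per block.

    Assumption: blocks follow one another with no gaps.
    """
    t = 0.0
    schedule = []
    for block in protocol:
        schedule.append((block.name, t, t + block.minutes))
        t += block.minutes
    return schedule
```

Under this assumption, the dark-room eyes-open block spans minutes 40 to 45 of the session.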

2.4 Desktop EEG Acquisition

The procedure was the same for the desktop EEG AMP acquisition, except that there were no RSEO-Dark or RSEC-Dark tasks, because lighting could not be effectively controlled in the room where the acquisitions took place.

2.5 Power Spectral Density and Event Related Potential Calculation

For 3CVT, raw EEG signals were filtered between 0.1 and 50 Hz using a Hamming-windowed sinc FIR filter (8449-point filter with a 0.1 Hz transition bandwidth). For each event type, EEG data were epoched from 1 s before to 2 s after stimulus onset. Baseline correction used the 100 ms preceding stimulus onset. Trials were rejected if the absolute EEG amplitude in any channel exceeded a threshold of 100 µV during the window from −50 ms to +750 ms relative to stimulus onset. Independent component analysis (ICA) was performed using EEGLAB software to detect and reject components classified as having non-brain sources. Epochs with abnormally distributed data, improbable data, or abnormal spectra were also removed using […]. The grand average ERP for each condition and trial type was calculated as a weighted average, using the number of ERPs in each condition as the weights.
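The 8449-point filter length is consistent with the usual Hamming-window estimate of filter order, 3.3 × fs / Δf = 3.3 × 256 / 0.1 = 8448, plus one tap. The NumPy sketch below covers the remaining steps described above: epoching, baseline correction, amplitude-threshold rejection, and the weighted grand average. It assumes filtering and ICA have already been applied, that baseline correction precedes rejection, and that data are in µV; the function names are hypothetical and are not EEGLAB's API.

```python
import numpy as np

FS = 256                    # Hz, sampling rate from Sect. 2.2
EPOCH_S = (-1.0, 2.0)       # epoch window relative to stimulus onset (s)
BASELINE_S = (-0.1, 0.0)    # 100 ms pre-stimulus baseline (s)
REJECT_S = (-0.05, 0.75)    # window checked against the amplitude threshold (s)
THRESH_UV = 100.0           # rejection threshold (µV)


def epoch(eeg, onsets, fs=FS, window=EPOCH_S):
    """Cut epochs from continuous, already-filtered EEG.

    eeg: array (n_channels, n_samples) in µV; onsets: stimulus sample indices.
    Onsets whose window would run past either end of the recording are skipped.
    Returns epochs (n_trials, n_channels, n_times) and the time axis in seconds.
    """
    start, stop = round(window[0] * fs), round(window[1] * fs)
    valid = [o for o in onsets if o + start >= 0 and o + stop <= eeg.shape[1]]
    epochs = np.stack([eeg[:, o + start:o + stop] for o in valid])
    times = np.arange(start, stop) / fs
    return epochs, times


def baseline_correct(epochs, times, window=BASELINE_S):
    """Subtract each trial's per-channel mean over the pre-stimulus baseline."""
    mask = (times >= window[0]) & (times < window[1])
    return epochs - epochs[:, :, mask].mean(axis=2, keepdims=True)


def reject_by_threshold(epochs, times, window=REJECT_S, thresh=THRESH_UV):
    """Drop trials whose |amplitude| exceeds `thresh` on any channel inside `window`."""
    mask = (times >= window[0]) & (times <= window[1])
    bad = (np.abs(epochs[:, :, mask]) > thresh).any(axis=(1, 2))
    return epochs[~bad]


def weighted_grand_average(subject_erps, n_trials):
    """Average per-participant ERPs, weighting each by its number of accepted trials."""
    weights = np.asarray(n_trials, dtype=float)
    return np.average(np.stack(subject_erps), axis=0, weights=weights)
```

Weighting by trial count means participants who contributed more clean trials pull the grand average proportionally more, which matches the weighting described in the text.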

For the resting-state tasks, data were bandpass filtered (1–40 Hz). Power spectral densities were computed using the Fast Fourier Transform with a Kaiser window on 1 s windows with 50% overlap. The total power in each 1 Hz frequency bin from 1 to 40 Hz and in nine frequency bands was computed for each epoch and averaged across all epochs in each session. Artifacts were detected using ABM’s proprietary artifact detection algorithms, and epochs containing more than 25% bad (artifact-contaminated) data points were excluded from analysis.
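The resting-state spectral steps can be sketched in NumPy as follows; averaging tapered, overlapping windows in this way is essentially Welch's method. Assumptions: the input is one already-filtered channel; the Kaiser β (8.6 here) is not stated in the text; a boolean per-sample artifact mask stands in for ABM's proprietary detector; and because the nine frequency bands are not listed, `band_power` is meant to be called with band edges of your choosing (for instance an assumed 8–13 Hz alpha band). All function names are hypothetical.

```python
import numpy as np

FS = 256  # Hz


def epoch_psd(x, fs=FS, beta=8.6):
    """One-sided power spectral density of a single 1 s window.

    beta=8.6 is an assumed Kaiser parameter. Assumes len(x) is even.
    """
    win = np.kaiser(len(x), beta)
    spec = np.fft.rfft((x - x.mean()) * win)  # remove DC, then taper
    psd = np.abs(spec) ** 2 / (fs * np.sum(win ** 2))
    psd[1:-1] *= 2  # fold negative frequencies into the one-sided spectrum
    return psd


def session_psd(signal, artifact, fs=FS, max_bad=0.25):
    """Average PSD over 1 s windows with 50% overlap, restricted to 1-40 Hz.

    artifact: boolean array, True where a sample was flagged as artifact.
    Windows with more than `max_bad` flagged samples are skipped.
    Returns (freqs, mean_psd, n_windows_used); mean_psd is None if none survive.
    """
    n, step = fs, fs // 2
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    spectra = [
        epoch_psd(signal[s:s + n], fs)
        for s in range(0, len(signal) - n + 1, step)
        if artifact[s:s + n].mean() <= max_bad
    ]
    keep = (freqs >= 1) & (freqs <= 40)
    if not spectra:
        return freqs[keep], None, 0
    return freqs[keep], np.mean(spectra, axis=0)[keep], len(spectra)


def band_power(freqs, psd, lo, hi):
    """Integrated power in [lo, hi) Hz; bins are 1 Hz wide for 1 s windows."""
    mask = (freqs >= lo) & (freqs < hi)
    return psd[mask].sum() * (freqs[1] - freqs[0])
```

Because each window is 1 s long, the frequency resolution is 1 Hz, which is why the 1–40 Hz range yields 40 bins per window.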

3 Results

3.1 Signal Quality

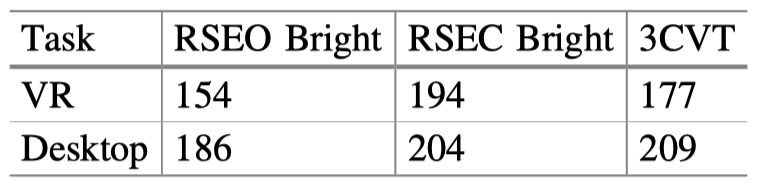

The signal quality during the VR EEG acquisition was comparable to that of the desktop acquisitions. Table 1 summarizes this data.

Table 1. Average number of clean epochs (or clean trials for ERP tasks) compared between VR and Desktop during each task

3.2 EEG Differences Between Desktop and VR Resting State in a Bright Environment

In all three resting-state conditions (Desktop Bright, VR Bright, and VR Dark), alpha power was, as expected, significantly greater (p < 0.01) at all channels during RSEC (eyes closed) than during RSEO (eyes open). Figure 3 depicts this finding for Desktop Bright (VR Bright and VR Dark are not shown).
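The text does not state which statistical test produced these within-subject p-values. As one possibility, the sketch below swaps in an exact paired sign-flip permutation test, a named substitute rather than the authors' method: with 10 participants, all 2^10 = 1024 sign assignments of the per-participant differences can be enumerated, so the smallest attainable two-sided p-value is 2/1024 ≈ 0.002. It runs independently per channel and applies no correction across the 20 channels; the function name is hypothetical.

```python
import itertools

import numpy as np


def paired_sign_flip_test(a, b):
    """Exact paired permutation (sign-flip) test, one test per channel.

    a, b: arrays (n_subjects, n_channels), e.g. alpha power during RSEC and
    RSEO for the same participants. Returns the mean difference and a
    two-sided p-value per channel. Enumerates all 2**n_subjects sign flips,
    so it is only practical for small samples such as n = 10.
    """
    d = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    n = d.shape[0]
    observed = d.mean(axis=0)
    signs = np.array(list(itertools.product([1.0, -1.0], repeat=n)))  # (2**n, n)
    null = signs @ d / n  # mean difference under every sign assignment
    # Small tolerance so ties with the observed statistic are counted
    p = (np.abs(null) >= np.abs(observed) - 1e-12).mean(axis=0)
    return observed, p
```

The same function applies unchanged to the VR versus Desktop and Dark versus Bright comparisons reported below.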

Fig. 3. Alpha power during resting state eyes closed compared to resting state eyes open for the desktop bright modality

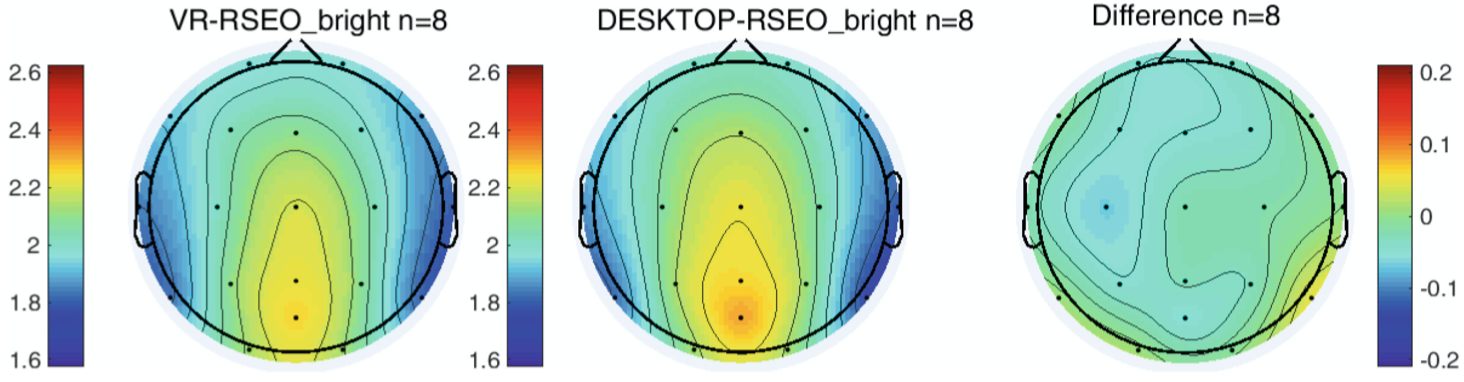

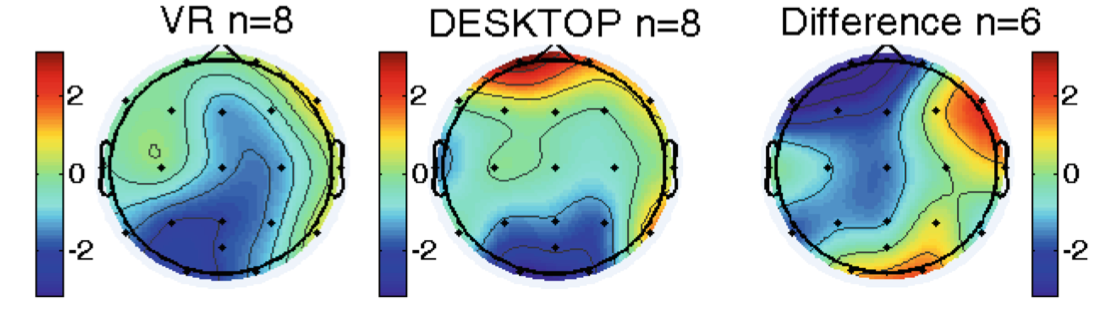

When comparing resting-state EEG between VR and Desktop acquisitions, there was no significant within-subject difference in alpha power during RSEO (Fig. 4) or RSEC (not pictured).

Fig. 4. Alpha power during VR (left) and desktop (center) RSEO. The rightmost panel displays the difference map.

3.3 Resting State Alpha Power in a Bright vs. Dark VR Environment

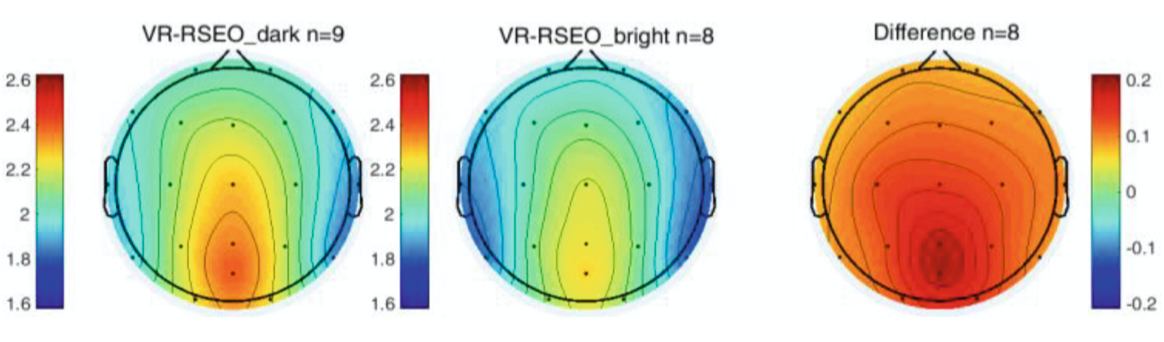

In VR during the eyes open task, alpha power was significantly greater (p < 0.01) in the dark environment compared to the bright environment at all channels (Fig. 5). There was no significant difference between the dark and bright environments with eyes closed.

Fig. 5. Differences in alpha power between Dark and Bright VEs for RSEO

3.4 Event Related Potentials and Performance During 3CVT

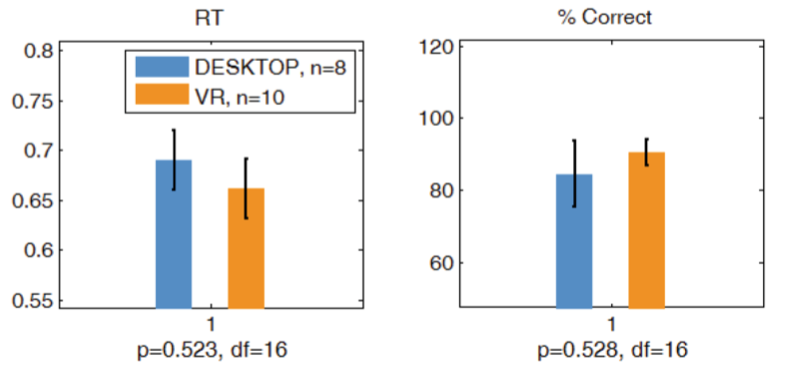

There were no significant performance differences between Desktop and VR 3CVT (Fig. 6).

Fig. 6. The performance metrics shown are reaction time (left) and percent correct (right)
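Reaction time and percent correct are reported without a stated definition. A minimal sketch, assuming percent correct is taken over all trials and mean reaction time over correct trials that received a response; the `Trial` record layout and `performance` function are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Trial:
    stimulus: str             # "Target", "NonTarget" or "Interference"
    correct: bool             # response matched the required button
    rt_ms: Optional[float]    # None if no response was made


def performance(trials):
    """Percent correct over all trials; mean RT over correct, responded trials."""
    pct = 100.0 * sum(t.correct for t in trials) / len(trials)
    rts = [t.rt_ms for t in trials if t.correct and t.rt_ms is not None]
    return {"percent_correct": pct, "mean_rt_ms": mean(rts) if rts else None}
```

Computing these per participant and per modality gives the within-subject pairs needed for a Desktop versus VR comparison.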

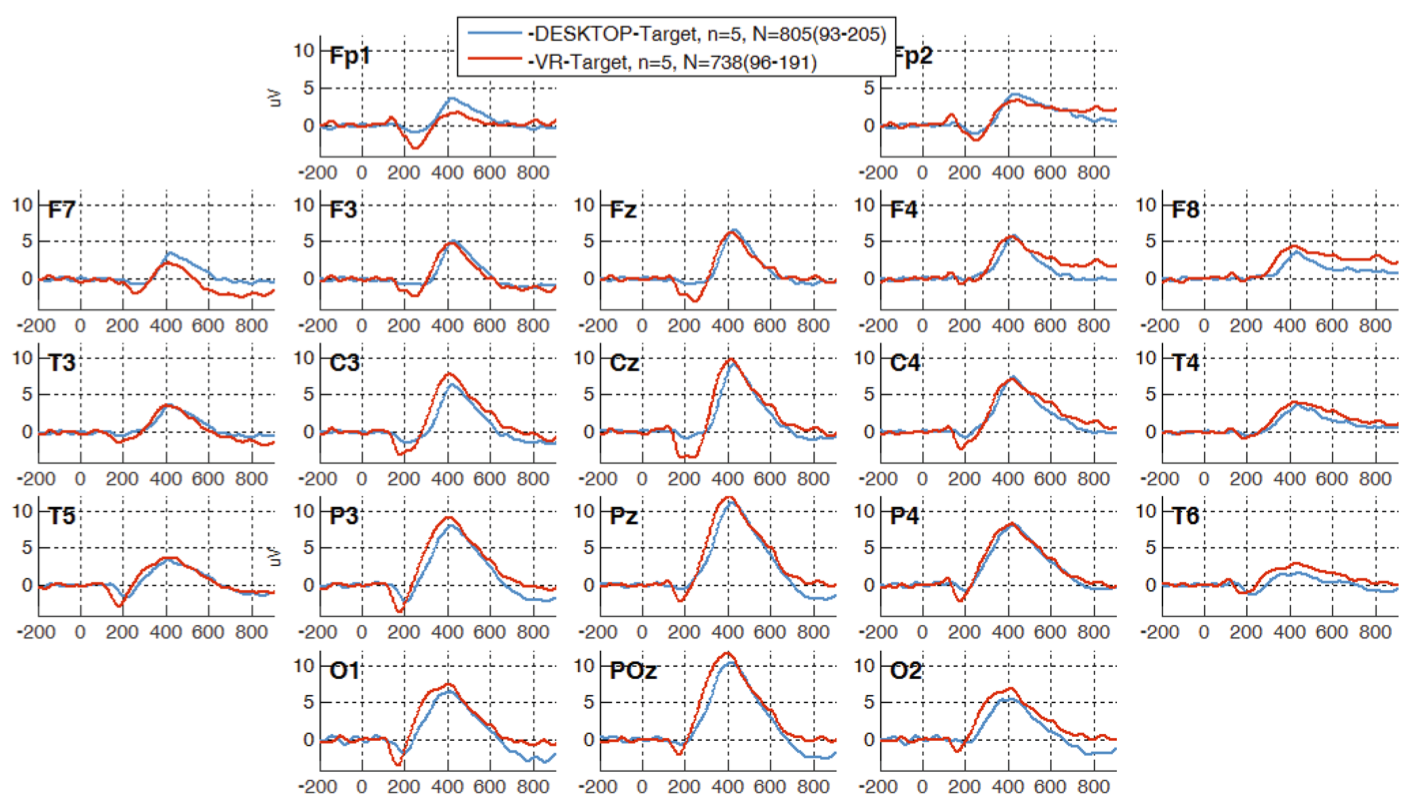

Figure 7 displays the grand averages for the two 3CVT modalities examined in this study. On visual inspection, the 3CVT ERP waveforms from the Desktop and VR acquisitions are very similar.

Fig. 7. Grand average plots for all participants for desktop and VR 3CVT Target (frequent) trials.

A within-subject comparison of late positive potential (LPP) amplitude was performed for Desktop and VR 3CVT Target (frequent) trials. There was no significant difference between VR and Desktop acquisitions at any channel (Fig. 8).

Fig. 8. LPP differences between VR and Desktop 3CVT Target (frequent) trials.

Additionally, the target effect, which is the difference in LPP amplitude between Target and NonTarget trials for each participant, is shown in Fig. 9. There was no significant difference in target effect between the VR and Desktop 3CVT.
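For each participant and modality, the target effect reduces to a difference of mean LPP amplitudes. The sketch below assumes ERPs are stored as (channels × samples) arrays on a shared time axis in seconds; the LPP latency window is not given in the text, so the 400–800 ms default is a placeholder assumption, and the function names are hypothetical.

```python
import numpy as np


def mean_amplitude(erp, times, window):
    """Mean amplitude of an ERP (n_channels, n_samples) within a latency window (s)."""
    mask = (times >= window[0]) & (times <= window[1])
    return erp[:, mask].mean(axis=1)


def target_effect(erp_target, erp_nontarget, times, window=(0.4, 0.8)):
    """Per-channel LPP target effect: Target minus NonTarget mean amplitude.

    The (0.4, 0.8) s window is a placeholder; the study's LPP window is not stated.
    """
    return (mean_amplitude(erp_target, times, window)
            - mean_amplitude(erp_nontarget, times, window))
```

Computing this separately for the VR and Desktop sessions gives one value per channel per modality for each participant, and these within-subject pairs are what the VR versus Desktop comparison tests.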

Fig. 9. Target effect for VR and Desktop 3CVT

4 Discussion

This project demonstrated that ABM’s B-Alert X24 hardware and acquisition software could be successfully integrated with the HTC Vive (programmed using Unreal Engine 4), allowing high-quality EEG data to be acquired with time-locked VR stimulus delivery at millisecond-level temporal resolution. As expected, a within-subjects analysis revealed no significant differences in the EEG measures between Desktop and VR AMP acquisitions.

This preliminary evaluation suggests that the integrated system could prove useful for numerous clinical and research applications. However, several issues will need to be addressed before VR-EEG can be widely adopted. The HTC Vive weighs 1.04 lb and exerts significant pressure on a participant’s face, causing discomfort and in some cases neck strain. The device is bulky, with several heavy wires, making it somewhat difficult to move around while wearing it. An improved form factor for the HTC Vive could ameliorate these problems and increase participant comfort, allowing for longer acquisitions. There is also potential for further integration with the ABM EEG headsets; for example, integrating the EEG electrodes into the Vive headset would yield a lighter and more comfortable system.

Thus, the HTC Vive appears to be a promising tool for future research, as it allows participants to be fully immersed in a given environment without changing the core neurological signatures associated with attention, learning, and memory. Because VR offers realistic 3-D experiences, it has many potential applications; one example is the measurement of optic flow. Optic flow (OF) refers to the perception and integration of the visual field as one moves through a physical environment. Specifically, “radial optic flow” involves the perception of motion around a central visual field when the scenery changes through self-directed movement or when objects move toward or away from the observer [7, 8].

Radial OF has been studied extensively in association with neurodegenerative disease, particularly Alzheimer’s disease (AD) and amnestic MCI (aMCI) [7–12]. Psychophysical testing confirmed OF impairment in AD but not in aMCI. However, neurophysiological studies using a variety of experimental paradigms designed to stimulate OF revealed differences in sensory (early components P100 and N130), perceptual, and cognitive ERP components in AD, and differences in cognitive ERP components in aMCI [7, 9]. The primary finding across studies of AD patients is longer latency and reduced amplitude of the P200. In one study of aMCI patients, P200 latency was inversely correlated with MMSE score, suggesting a link to cognitive decline.

These ERP changes are also highly correlated with impairments in navigational abilities in AD [7, 9, 11]. Interestingly, not all AD or MCI patients show these ERP changes, and several reports indicate that older adults with no neurodegenerative disease show OF-related ERP changes and impaired navigational capabilities. Additional research is required to further delineate the differences among AD, MCI, and controls, to associate the findings with regional differences in structure or function (MRI, fMRI, and PET), and to better understand the relationships with cognitive decline and navigational abilities.

There is currently no standardized method for testing patients for OF deficits, although such deficiencies may be inferred following a comprehensive ophthalmological work-up [8]. Multiple computerized tests and OF training protocols have been reported in the literature; however, there is no consensus on the size, color, shape, or other dimensions of the visual stimuli used to evoke OF. All these approaches are administered to non-mobile participants and can therefore only evaluate perceived movement within the display. In contrast, the virtual reality (VR) environment offers a rich array of potential test environments for simulating the 3-dimensional characteristics of true OF as encountered during locomotion.

However, OF testing is not the only use case for an integrated VR/EEG headset. VEs could also provide an opportunity to assess “skill in activities of daily living” by embedding various tests of attention and memory, such as recalling shopping lists, finding items in a store, and adding up dollar amounts before check-out.

References

1. Baka, E., et al.: An EEG-based evaluation for comparing the sense of presence between virtual and physical environments. In: Proceedings of Computer Graphics International 2018, pp. 107–116. ACM, Bintan Island (2018)

2. Tromp, J., et al.: Combining EEG and virtual reality: the N400 in a virtual environment. In: The 4th Edition of the Donders Discussions (DD 2015), Nijmegen, Netherlands (2015)

3. Lin, C.-T., et al.: EEG-based assessment of driver cognitive responses in a dynamic virtual-reality driving environment. IEEE Trans. Biomed. Eng. 54(7), 1349–1352 (2007)

4. Makransky, G., Terkildsen, T.S., Mayer, R.E.: Adding immersive virtual reality to a science lab simulation causes more presence but less learning. Learn. Instr. 60, 225–236 (2017)

5. Slobounov, S.M., et al.: Modulation of cortical activity in 2D versus 3D virtual reality environments: an EEG study. Int. J. Psychophysiol. 95(3), 254–260 (2015)

6. Slobounov, S.M., Teel, E., Newell, K.M.: Modulation of cortical activity in response to visually induced postural perturbation: combined VR and EEG study. Neurosci. Lett. 547, 6–9 (2013)

7. Tata, M.S., et al.: Selective attention modulates electrical responses to reversals of optic-flow direction. Vis. Res. 50(8), 750–760 (2010)

8. Albers, M.W., et al.: At the interface of sensory and motor dysfunctions and Alzheimer’s disease. Alzheimers Dement. 11(1), 70–98 (2015)

9. Yamasaki, T., et al.: Selective impairment of optic flow perception in amnestic mild cognitive impairment: evidence from event-related potentials. J. Alzheimers Dis. 28(3), 695–708 (2012)

10. Kim, N.G.: Perceiving collision impacts in Alzheimer’s disease: the effect of retinal eccentricity on optic flow deficits. Front. Aging Neurosci. 7, 218 (2015)

11. Hort, J., Laczó, J., Vyhnálek, M., Bojar, M., Bureš, J., Vlček, K.: Spatial navigation deficit in amnestic mild cognitive impairment (n.d.)

12. Kavcic, V., Vaughn, W., Duffy, C.J.: Distinct visual motion processing impairments in aging and Alzheimer’s disease. Vis. Res. 51(3), 386–395 (2012). https://doi.org/10.1016/j.visres.2010.12.004
