1,291 views · Uploaded 2020-03-02 11:39:40

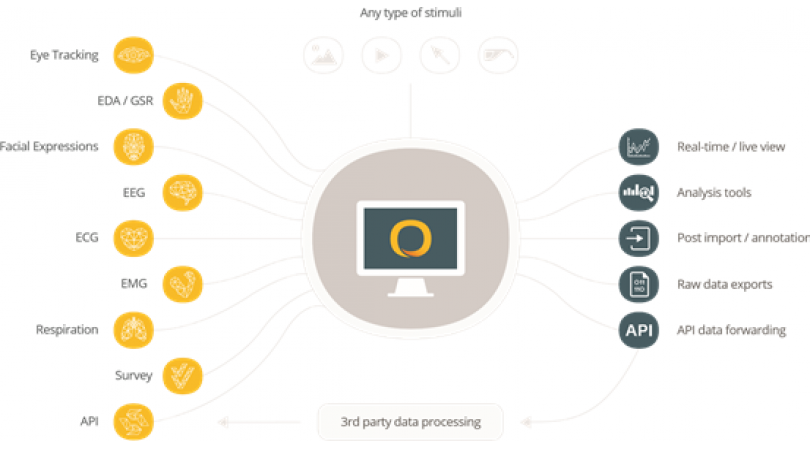

This is the Stanford Automotive Innovation Facility. I'm in charge of the driving simulator lab, where we work on experiments in our driving simulator, but also on some on-the-road simulators, looking at how people behave in driving contexts. This is an environment where people are in really close proximity with machines and cooperating with them all the time, so it's a really great environment to do user research. One of the things that's been wonderful about it is that we're able to monitor people, see how they perform, measure that very carefully, and look at how they react.

So we are using these technologies to help us see where people are looking when they're driving and how they're responding, using facial action coding. We are also interested in how they respond physiologically to things that happen. If they are driving down the road and someone pops out, does their heart rate change? Does their galvanic skin response change?

In an academic environment, there is always a temptation to roll your own sensors and make your own software. One of the things we've discovered over a long time doing research is that it's really important to use standard, really well-respected platforms to do that. First of all, it means you are actually able to focus on research, not spending all your time fixing things that you kind of strung together. And we're at a point where it's not the fact that we have additional computation or additional sensing, but our ability to knit these things together, that becomes really important. So figuring out how to integrate data and derive a larger picture from it is really what's important.

This is one of the world's most advanced simulators. We have a digital instrument panel up here, we have a touch screen here, and we can display anything we want on these screens to provide information to the drivers. We have wrap-around 270-degree arc screens
surrounding us, so you can see that it's really immersive. I mean, it feels like we're in a city.

On this screen is the front view of what the driver sees, and this is the overhead driver's perspective. Then on this screen, the eye tracking is up on the top; this is the driver's eye view. On the bottom, we have information piped in from the simulator, so we can see the throttle angle, we can see brake force, we can see the steering angle, and we can see event triggers.

In the simulation we have to build events in: a car cutting you off, or a pedestrian coming out into the road. When something happens that the driver has to react to, we need to see how long it takes the driver to react, and then how they react. We get all that information piped in from the simulator to the iMotions Attention Tool, and then we can go on and look at the other indicators. So where is the driver looking? We can integrate that with the EEG data, GSR, and heart rate, and also look at the driver's emotional state using the FACET analysis.

Having more information streams that are integrated into the same tool simplifies our understanding of what's going on. So we can put out publications faster. We don't have to spend weeks or months coding data and trying to line up the time on the different data streams, so we can turn out papers faster, and with all the new information we can also do more thorough research.

It's really important for the data to be good. It's really important that all your data is synchronized, and it's really important that the tool chain is well understood and completely transparent. We take these things really seriously: how we collect the data, making sure that it's good, validating that it's synchronized. Anything that helps us do that is basically saving us time and money, and the gain in happiness can be substantial.

The wonderful thing about our driving simulator is that it lets us buy
a little piece of the future, or sometimes a little piece of an alternative present, where you can do things that aren't safe to do on the real road, or you can put things in a car that aren't in the car right now. We can fake technologies that don't exist yet, like cars that can talk to you in real speech, about the real context, about why things are happening.

From this point on, we're looking at ways that machines and humans can collaborate and scaffold each other far more. If you just think about the driving task, there are things that people can see and sense and predict, and there are things that machines can see and predict. I think the big challenge right now is that it's very difficult for people and machines to communicate. So that's a lot of what we're working on, and we're able to use these wonderful tools and this controlled environment. It's early this one.
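
The reaction-time measurement described in the transcript (an event trigger, then the driver's response on a shared timeline) can be illustrated with a minimal Python sketch. It is not the lab's software or any vendor's API. The function names, the `(timestamp, value)` sample format, and the 0.1 brake-force threshold are all assumptions made for illustration, and the sample values are invented.

```python
from bisect import bisect_left

def reaction_time(event_time, brake_samples, threshold=0.1):
    """Seconds from an event trigger to the first brake sample at or above
    `threshold`. `brake_samples` is a list of (timestamp_s, brake_force)
    pairs sorted by timestamp. Returns None if the driver never brakes.
    The threshold value is an assumption, not a published criterion."""
    times = [t for t, _ in brake_samples]
    start = bisect_left(times, event_time)  # first sample at/after the event
    for t, force in brake_samples[start:]:
        if force >= threshold:
            return t - event_time
    return None

def nearest_sample(samples, t):
    """Value of the sample whose timestamp is closest to `t`.
    `samples` is a non-empty list of (timestamp_s, value) sorted by time.
    Useful when streams (e.g. heart rate) run at different sample rates."""
    times = [ts for ts, _ in samples]
    i = bisect_left(times, t)
    candidates = samples[max(i - 1, 0): i + 1]  # neighbours on either side
    return min(candidates, key=lambda s: abs(s[0] - t))[1]

# Invented example data: a pedestrian event fires at t = 1.0 s.
brake = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0), (1.5, 0.05), (2.0, 0.4), (2.5, 0.9)]
heart_rate = [(0.0, 70), (1.0, 72), (2.0, 85), (3.0, 88)]

rt = reaction_time(1.0, brake)
hr_at_reaction = nearest_sample(heart_rate, 1.0 + rt) if rt is not None else None
print(rt, hr_at_reaction)
```

Both functions rely on every stream sharing one clock. That is the synchronization requirement the speakers stress: without it, the lookup by timestamp would compare unrelated moments.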